C |

| C is a general-purpose, imperative computer programming language, supporting structured programming, lexical variable scope and recursion, while a static type system prevents many unintended operations. By design, C provides constructs that map efficiently to typical machine instructions, and therefore it has found lasting use in applications that had formerly been coded in assembly language, including operating systems, as well as various application software for computers ranging from supercomputers to embedded systems.

C was originally developed by Dennis Ritchie between 1969 and 1973 at Bell Labs, and used to re-implement the Unix operating system. It has since become one of the most widely used programming languages of all time,[8][9] with C compilers from various vendors available for the majority of existing computer architectures and operating systems. C has been standardized by the American National Standards Institute (ANSI) since 1989 (see ANSI C) and subsequently by the International Organization for Standardization (ISO).

C is an imperative procedural language. It was designed to be compiled using a relatively straightforward compiler, to provide low-level access to memory, to provide language constructs that map efficiently to machine instructions, and to require minimal run-time support. Despite its low-level capabilities, the language was designed to encourage cross-platform programming. A standards-compliant and portably written C program can be compiled for a very wide variety of computer platforms and operating systems with few changes to its source code. The language has become available on a very wide range of platforms, from embedded microcontrollers to supercomputers. |

C# |

| C# is a multi-paradigm programming language encompassing strong typing, imperative, declarative, functional, generic, object-oriented (class-based), and component-oriented programming disciplines. It was developed around 2000 by Microsoft within its .NET initiative and later approved as a standard by Ecma (ECMA-334) and ISO (ISO/IEC 23270:2006). C# is one of the programming languages designed for the Common Language Infrastructure.

C# is a general-purpose, object-oriented programming language. |

C++ |

| C++ is a general-purpose programming language. It has imperative, object-oriented and generic programming features, while also providing facilities for low-level memory manipulation.

It was designed with a bias toward system programming and embedded, resource-constrained and large systems, with performance, efficiency and flexibility of use as its design highlights. C++ has also been found useful in many other contexts, with key strengths being software infrastructure and resource-constrained applications, including desktop applications, servers (e.g. e-commerce, Web search or SQL servers), and performance-critical applications (e.g. telephone switches or space probes). C++ is a compiled language, with implementations of it available on many platforms. Many vendors provide C++ compilers, including the Free Software Foundation, Microsoft, Intel, and IBM.

C++ is standardized by the International Organization for Standardization (ISO), with the latest standard version ratified and published by ISO in December 2017 as ISO/IEC 14882:2017 (informally known as C++17). The C++ programming language was initially standardized in 1998 as ISO/IEC 14882:1998, which was then amended by the C++03, C++11 and C++14 standards. The current C++17 standard supersedes these with new features and an enlarged standard library. Before the initial standardization in 1998, C++ had been developed by Bjarne Stroustrup at Bell Labs since 1979 as an extension of the C language; he wanted an efficient and flexible language similar to C that also provided high-level features for program organization. C++20 is the next planned standard. |

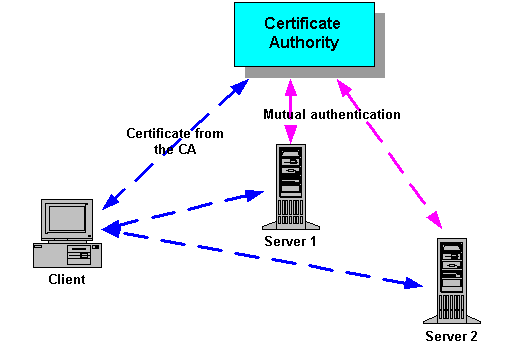

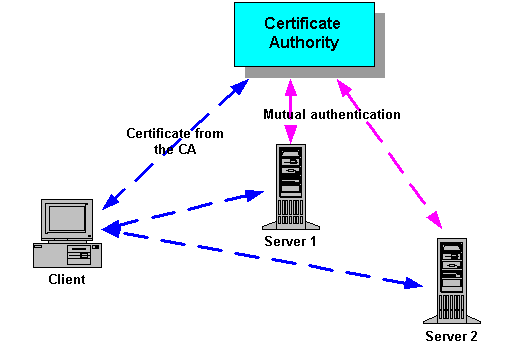

CA | Certificate Authority | In cryptography, a certificate authority or certification authority (CA) is an entity that issues digital certificates. A digital certificate certifies the ownership of a public key by the named subject of the certificate. This allows others (relying parties) to rely upon signatures or on assertions made about the private key that corresponds to the certified public key. A CA acts as a trusted third party—trusted both by the subject (owner) of the certificate and by the party relying upon the certificate. The format of these certificates is specified by the X.509 standard.

One particularly common use for certificate authorities is to sign certificates used in HTTPS, the secure browsing protocol for the World Wide Web. Another common use is in issuing identity cards by national governments for use in electronically signing documents.

|

Cacti |

| Cacti is an open-source, web-based network monitoring and graphing tool designed as a front-end application for the open-source, industry-standard data logging tool RRDtool. Cacti allows a user to poll services at predetermined intervals and graph the resulting data. It is generally used to graph time-series data of metrics such as CPU load and network bandwidth utilization. A common usage is to monitor network traffic by polling a network switch or router interface via Simple Network Management Protocol (SNMP). |

Cadence |

| The number of days or weeks in a Sprint or release; the length of the team's development cycle. |

Calendar Days |

| Time measure of the number of work days an activity takes.

The relationship between staff days and calendar days depends on how the work is scheduled. |
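As a rough illustration of how scheduling links the two measures, the Python sketch below converts effort in staff days into calendar days under a deliberately simplified, hypothetical model: the effort divides evenly among `people`, each of whom spends a fixed fraction `allocation` of every work day on the activity. It ignores ramp-up, communication overhead and task dependencies, which in practice stop schedules from shrinking in proportion to staffing. The function name is illustrative.

```python
import math


def calendar_days(staff_days: float, people: int, allocation: float = 1.0) -> int:
    """Estimate calendar days for an activity from its effort in staff days.

    Simplified model (an assumption, not a standard formula): effort divides
    evenly among `people`, each spending `allocation` (0 < allocation <= 1)
    of every work day on the activity.
    """
    if people <= 0 or not 0 < allocation <= 1:
        raise ValueError("need at least one person and 0 < allocation <= 1")
    return math.ceil(staff_days / (people * allocation))


print(calendar_days(20, 2))       # two people full-time
print(calendar_days(20, 4, 0.5))  # four people at half time
```

Both calls describe the same 20 staff days of effort scheduled in different ways, and in this model both give 10 calendar days.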

CAM Item |

| Corrective activity management item. This is an abstract term referring to a change request, defect, risk, or issue that is being managed in a CAM process. |

CAMEL | Customized Applications for Mobile networks Enhanced Logic | Customized Applications for Mobile networks Enhanced Logic (CAMEL) is a set of standards designed to work on either a GSM core network or the Universal Mobile Telecommunications System (UMTS) network. The framework provides tools for operators to define additional features for standard GSM services/UMTS services. The CAMEL architecture is based on the Intelligent Network (IN) standards, and uses the CAP protocol. The protocols are codified in a series of ETSI Technical Specifications.

Many services can be created using CAMEL, and it is particularly effective in allowing these services to be offered when a subscriber is roaming, for example no-prefix dialing (the number the user dials is the same no matter the country where the call is placed) or seamless MMS message access from abroad. |

Camel Case |

| Camel case (stylized as camelCase or CamelCase; also known as camel caps or more formally as medial capitals) is the practice of writing compound words or phrases such that each word or abbreviation in the middle of the phrase begins with a capital letter, with no intervening spaces or punctuation. Common examples include "iPhone", "eBay", "FedEx", "DreamWorks", and "HarperCollins". It is also sometimes used in online usernames such as "JohnSmith", and to make multi-word domain names more legible, for example in advertisements.

Programming and coding

The use of medial caps for compound identifiers is recommended by the coding style guidelines of many organizations or software projects. For some languages (such as Mesa, Pascal, Modula, Java and Microsoft's .NET) this practice is recommended by the language developers or by authoritative manuals and has therefore become part of the language's "culture".

Style guidelines often distinguish between upper and lower camel case, typically specifying which variety should be used for specific kinds of entities: variables, record fields, methods, procedures, types, etc. These rules are sometimes supported by static analysis tools that check source code for adherence.

The original Hungarian notation for programming, for example, specifies that a lowercase abbreviation for the "usage type" (not data type) should prefix all variable names, with the remainder of the name in upper camel case; as such it is a form of lower camel case.

Programming identifiers often need to contain acronyms and initialisms that are already in uppercase, such as "old HTML file". By analogy with the title case rules, the natural camel case rendering would have the abbreviation all in uppercase, namely "oldHTMLFile". However, this approach is problematic when two acronyms occur together (e.g., "parse DBM XML" would become "parseDBMXML") or when the standard mandates lower camel case but the name begins with an abbreviation (e.g. "SQL server" would become "sQLServer"). For this reason, some programmers prefer to treat abbreviations as if they were lowercase words and write "oldHtmlFile", "parseDbmXml" or "sqlServer". However, this can make it harder to recognise that a given word is intended as an acronym.

|
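The convention of treating acronyms as ordinary lowercase words can be sketched as a small Python helper. `to_camel` is a hypothetical name used only for illustration, not part of any style guide's tooling; it lowercases every word, including acronyms, and then capitalizes only the first letter of each word after the first (or of every word, for upper camel case).

```python
def to_camel(phrase: str, upper: bool = False) -> str:
    """Join space-separated words into lower (default) or upper camel case.

    Acronyms are treated as ordinary words, so "parse DBM XML" becomes
    "parseDbmXml" rather than "parseDBMXML".
    """
    words = [w.lower() for w in phrase.split()]
    if not words:
        return ""
    head = words[0].capitalize() if upper else words[0]
    return head + "".join(w.capitalize() for w in words[1:])


print(to_camel("old HTML file"))            # oldHtmlFile
print(to_camel("SQL server"))               # sqlServer
print(to_camel("SQL server", upper=True))   # SqlServer
```

The trade-off noted above still applies: the output no longer shows that "Dbm" or "Sql" began as acronyms.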

CAP | CAMEL Application Part | The CAMEL Application Part (CAP) is a signalling protocol used in the Intelligent Network (IN) architecture. CAP is a Remote Operations Service Element (ROSE) user protocol, and as such is layered on top of the Transaction Capabilities Application Part (TCAP) of the SS7 protocol suite. CAP is based on a subset of the ETSI Core and allows for the implementation of carrier-grade, value-added services such as unified messaging, prepaid, fraud control and Freephone in both the GSM voice and GPRS data networks. CAMEL is a means of adding intelligent applications to mobile (rather than fixed) networks. It builds upon established practices in the fixed-line telephony business that are generally classed under the heading of the Intelligent Network Application Part (INAP) CS-2 protocol. |

Capability Maturity Model |

| The Capability Maturity Model (CMM) is a development model created after a study of data collected from organizations that contracted with the U.S. Department of Defense, who funded the research. The term "maturity" relates to the degree of formality and optimization of processes, from ad hoc practices, to formally defined steps, to managed result metrics, to active optimization of the processes.

The model's aim is to improve existing software development processes, but it can also be applied to other processes.

Structure

The model involves five aspects:

Maturity Levels: a 5-level process maturity continuum, where the uppermost (5th) level is a notional ideal state in which processes would be systematically managed by a combination of process optimization and continuous process improvement.

Key Process Areas: a Key Process Area identifies a cluster of related activities that, when performed together, achieve a set of goals considered important.

Goals: the goals of a key process area summarize the states that must exist for that key process area to have been implemented in an effective and lasting way. The extent to which the goals have been accomplished is an indicator of how much capability the organization has established at that maturity level. The goals signify the scope, boundaries, and intent of each key process area.

Common Features: common features include practices that implement and institutionalize a key process area. There are five types of common features: commitment to perform, ability to perform, activities performed, measurement and analysis, and verifying implementation.

Key Practices: the key practices describe the elements of infrastructure and practice that contribute most effectively to the implementation and institutionalization of the area.

Levels

There are five levels defined along the continuum of the model. According to the SEI, "Predictability, effectiveness, and control of an organization's software processes are believed to improve as the organization moves up these five levels. While not rigorous, the empirical evidence to date supports this belief."

Initial (chaotic, ad hoc, individual heroics): the starting point for use of a new or undocumented repeat process.

Repeatable: the process is at least documented sufficiently such that repeating the same steps may be attempted.

Defined: the process is defined/confirmed as a standard business process.

Capable: the process is quantitatively managed in accordance with agreed-upon metrics.

Efficient: process management includes deliberate process optimization/improvement.

Within each of these maturity levels are Key Process Areas which characterise that level, and for each such area there are five factors: goals, commitment, ability, measurement, and verification. These are not necessarily unique to CMM; they represent the stages that organizations must go through on the way to becoming mature.

The model provides a theoretical continuum along which process maturity can be developed incrementally from one level to the next. Skipping levels is neither allowed nor feasible.

|

Cat 5 |

| Category 5 cable, commonly referred to as Cat 5, is a twisted pair cable for computer networks. The cable standard provides performance of up to 100 MHz and is suitable for most varieties of Ethernet over twisted pair. Cat 5 is also used to carry other signals such as telephony and video.

This cable is commonly connected using punch-down blocks and modular connectors. Most Category 5 cables are unshielded, relying on the balanced line twisted pair design and differential signaling for noise rejection.

The category 5 specification was deprecated in 2001 and is superseded by the category 5e specification. |

Cat 5e |

| See Cat 5. |

Cat 6 |

| Category 6 cable, commonly referred to as Cat 6, is a standardized twisted pair cable for Ethernet and other network physical layers that is backward compatible with the Category 5/5e and Category 3 cable standards.

Compared with Cat 5 and Cat 5e, Cat 6 features more stringent specifications for crosstalk and system noise. The cable standard also specifies performance of up to 250 MHz compared to 100 MHz for Cat 5 and Cat 5e.

Whereas Category 6 cable has a reduced maximum length of 55 meters when used for 10GBASE-T, Category 6A cable (or Augmented Category 6) is characterized to 500 MHz and has improved alien crosstalk characteristics, allowing 10GBASE-T to be run for the same 100-meter maximum distance as previous Ethernet variants. |

CCITT | Comité Consultatif International Téléphonique et Télégraphique | See ITU-T |

CCS | Common Channel Signalling | In telephony, common-channel signaling (CCS), in the US also called common-channel interoffice signaling (CCIS), is the transmission of signaling information (control information) on a channel separate from the one carrying the data, and, more specifically, where that signaling channel controls multiple data channels.

For example, in the public switched telephone network (PSTN), one channel of a communications link is typically used for the sole purpose of carrying signaling for the establishment and teardown of telephone calls. The remaining channels are used entirely for the transmission of voice data. In most cases, a single 64 kbit/s channel is sufficient to handle the call setup and call clear-down traffic for numerous voice and data channels.

The logical alternative to CCS is channel-associated signaling (CAS), in which each bearer channel has a signaling channel dedicated to it.

CCS offers the following advantages over CAS, in the context of the PSTN:

Faster call set-up time.

Greater trunking efficiency due to the quicker set-up and clearing, thereby reducing traffic on the network.

Can transfer additional information along with the signaling traffic, providing features such as caller ID.

Signaling can be performed mid-call.

The most common CCS signaling methods in use today are Integrated Services Digital Network (ISDN) and Signalling System No. 7 (SS7).

ISDN signaling is used primarily on trunks connecting end-user private branch exchange (PBX) systems to a central office. SS7 is primarily used within the PSTN. The two signaling methods are very similar since they share a common heritage and in some cases, the same signaling messages are transmitted in both ISDN and SS7.

|

CCTA | Central Computer and Telecommunications Agency | The Central Computer and Telecommunications Agency (CCTA) was a UK government agency providing computer and telecoms support to government departments.

|

CDMA | Code-division multiple access | Code-division multiple access (CDMA) is a channel access method used by various radio communication technologies.

CDMA is an example of multiple access, where several transmitters can send information simultaneously over a single communication channel. This allows several users to share a band of frequencies. To permit this without undue interference between the users, CDMA employs spread spectrum technology and a special coding scheme (where each transmitter is assigned a code).

CDMA is used as the access method in many mobile phone standards. IS-95, also called "cdmaOne", and its 3G evolution CDMA2000, are often simply referred to as "CDMA", but UMTS, the 3G standard used by GSM carriers, also uses "wideband CDMA", or W-CDMA, as well as TD-CDMA and TD-SCDMA, as its radio technologies. |
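A toy illustration of the code-division idea, as a Python sketch: two transmitters each spread one data bit with their own orthogonal spreading code (length-4 Walsh codes, assigned to hypothetical users "alice" and "bob" purely for illustration), their chips add together on the shared channel, and each receiver recovers its own bit by correlating the combined signal with its code. Real systems add scrambling, power control and noise, none of which this sketch models.

```python
# Orthogonal length-4 Walsh codes, one per user (illustrative assignment).
CODES = {
    "alice": [1, 1, 1, 1],
    "bob": [1, -1, 1, -1],
}


def spread(bit: int, code: list[int]) -> list[int]:
    """Map bit 0/1 to -1/+1 and multiply it by every chip of the code."""
    symbol = 1 if bit else -1
    return [symbol * chip for chip in code]


def channel(*signals: list[int]) -> list[int]:
    """Transmissions overlap in time and frequency, so their chips simply add."""
    return [sum(chips) for chips in zip(*signals)]


def despread(received: list[int], code: list[int]) -> int:
    """Correlate with the user's code; the sign of the result gives the bit."""
    correlation = sum(r * c for r, c in zip(received, code))
    return 1 if correlation > 0 else 0


combined = channel(spread(1, CODES["alice"]), spread(0, CODES["bob"]))
print(despread(combined, CODES["alice"]), despread(combined, CODES["bob"]))  # 1 0
```

Because the codes are orthogonal, the other user's contribution sums to zero in each correlation, which is what lets both bits share the same band at the same time.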

CDMA2000 | Code-division multiple access 2000 | CDMA2000 (also known as C2K or IMT Multi Carrier (IMT MC)) is a family of 3G mobile technology standards for sending voice, data, and signaling data between mobile phones and cell sites. It is developed by 3GPP2 as a backwards-compatible successor to the second-generation cdmaOne (IS-95) set of standards and is used especially in North America and South Korea.

CDMA2000 competes with UMTS, another set of 3G standards, which is developed by 3GPP and used in Europe, Japan, and China. |

CDR | Call Detail Record | A call detail record (CDR) is a data record produced by a telephone exchange or other telecommunications equipment that documents the details of a telephone call or other telecommunications transaction (e.g., text message) that passes through that facility or device. The record contains various attributes of the call, such as time, duration, completion status, source number, and destination number. |
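As a sketch of the kind of record described above, the Python dataclass below models the attributes the definition lists: time, duration, completion status, source number and destination number. The field names, the `CallStatus` values and the `billable_seconds` helper are hypothetical; real CDR formats are vendor- or standard-specific and carry many more fields.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum


class CallStatus(Enum):
    ANSWERED = "answered"
    BUSY = "busy"
    NO_ANSWER = "no_answer"
    FAILED = "failed"


@dataclass(frozen=True)
class CallDetailRecord:
    start_time: datetime
    duration: timedelta
    status: CallStatus
    source_number: str
    destination_number: str

    @property
    def end_time(self) -> datetime:
        """Derived rather than stored: start time plus duration."""
        return self.start_time + self.duration


def billable_seconds(records: list[CallDetailRecord]) -> int:
    """Sum the durations of answered calls, e.g. as input to a billing run."""
    return sum(int(r.duration.total_seconds()) for r in records
               if r.status is CallStatus.ANSWERED)
```

Making the record frozen reflects that a CDR documents a call after the fact and is not normally edited once produced.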

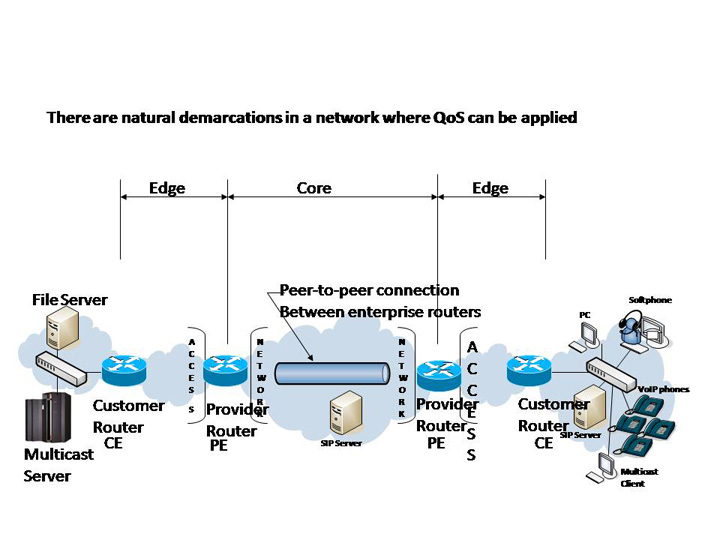

CE | Customer Edge | The customer edge (CE) is the router at the customer premises that is connected to the provider edge of a service provider IP/MPLS network. CE peers with the Provider Edge (PE) and exchanges routes with the corresponding VRF inside the PE. The routing protocol used could be static or dynamic (an interior gateway protocol like OSPF or an exterior gateway protocol like BGP).

|

CER | Canonical Encoding Rules | CER (Canonical Encoding Rules) is a restricted variant of BER for producing unequivocal transfer syntax for data structures described by ASN.1. Whereas BER gives choices as to how data values may be encoded, CER (together with DER) selects just one encoding from those allowed by the basic encoding rules, eliminating the rest of the options. CER is useful when the encodings must be preserved, e.g., in security exchanges. |
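The difference can be seen on the ASN.1 BOOLEAN type, shown here as a small Python sketch. Under BER any non-zero contents octet means TRUE, whereas CER (and DER) require exactly 0xFF, so each value has a single valid encoding. The function names are hypothetical, and only this one single-octet type is handled; a real encoder covers tags, lengths and every ASN.1 type.

```python
def encode_boolean(value: bool) -> bytes:
    """Canonical (CER/DER) encoding: tag 0x01, length 0x01, contents 0xFF or 0x00."""
    return bytes([0x01, 0x01, 0xFF if value else 0x00])


def decode_boolean(data: bytes, canonical: bool = True) -> bool:
    """Decode a BOOLEAN TLV; in canonical mode, reject BER-only encodings of TRUE."""
    if len(data) != 3 or data[0] != 0x01 or data[1] != 0x01:
        raise ValueError("not a single-octet BOOLEAN")
    contents = data[2]
    if canonical and contents not in (0x00, 0xFF):
        raise ValueError(f"non-canonical TRUE encoding: 0x{contents:02X}")
    return contents != 0x00


print(decode_boolean(b"\x01\x01\x01", canonical=False))  # True: valid BER
```

Because a canonical decoder rejects the 0x01 variant, two parties that encode the same value always produce identical bytes, which is what makes such encodings safe to hash or sign.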

Ceremonies |

| Meetings, often a daily planning meeting, that identify what has been done, what is to be done and the barriers to success. |

CESG | Communications-Electronics Security Group | CESG is the UK government's national technical authority for information assurance (IA). It protects the UK by providing policy and assistance on the security of communications and electronic data, in partnership with industry and academia.

The group is known as CESG. It dropped the expanded name of Communications-Electronics Security Group in 2002 because it no longer described the full extent of its work. CESG has existed since World War I when it advised on the security of British codes and ciphers. Since 1997, it has charged a fee for most of the information security services it offers rather than being funded by central government.

UK central government departments and agencies and the armed forces are CESG's main customers. CESG also works with the wider public sector, including health service, law enforcement, local government and the utility companies that provide the services that form the UK's critical national infrastructure.

CESG provides information assurance products and services and accreditation for consultants in industry. It also produces policy and guidance on biometrics and runs GovCertUK, the Computer Emergency Response Team (CERT) for UK government, assisting public sector organizations in their response to computer security incidents and providing advice to reduce their exposure to security threats.

Other services provided by CESG include Information Assurance Maturity Model (IAMM) assessment services for organisations wishing to check their progress towards the National IA Strategy and security awareness training for government clients. |

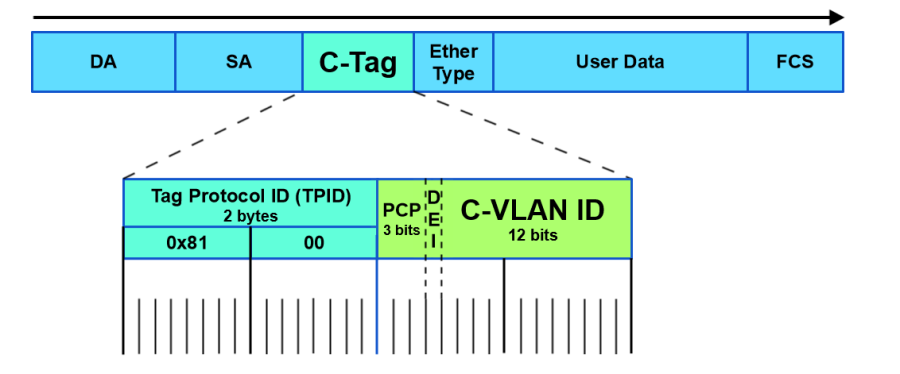

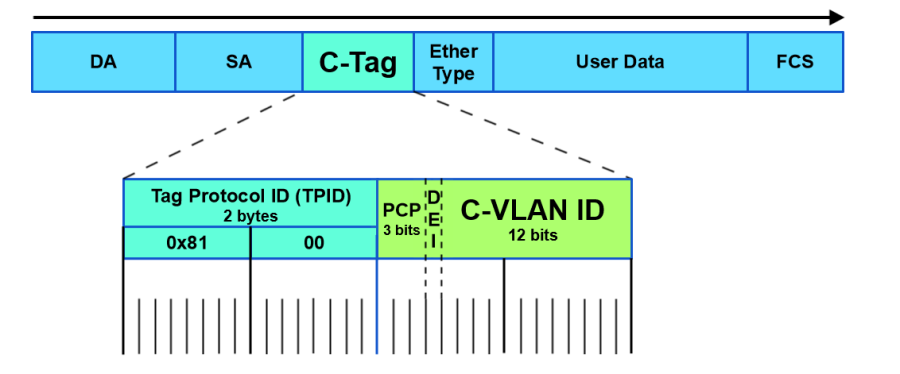

CFI | Canonical Format Indicator | A bit in an IEEE 802.1Q frame.

The Canonical Format Indicator (CFI) bit indicates whether MAC address information carried in the frame is in canonical format (the bit order used by Ethernet) or not. For Ethernet frames, this bit is always set to 0. (The other possible value, CFI=1, is used for Token Ring LANs, and tagged frames should never be bridged between an Ethernet and a Token Ring LAN regardless of the VLAN tag or MAC address.) |
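Where the CFI sits can be shown with a short Python sketch that parses the 4-byte 802.1Q tag: a 16-bit Tag Protocol Identifier (0x8100) followed by the 16-bit Tag Control Information, whose bits are the 3-bit priority code point, the 1-bit CFI and the 12-bit VLAN identifier, all in network byte order. `Dot1QTag` and `parse_dot1q_tag` are illustrative names, and the input is assumed to be just the tag, already sliced out of the frame.

```python
import struct
from typing import NamedTuple


class Dot1QTag(NamedTuple):
    pcp: int  # 3-bit priority code point
    cfi: int  # 1-bit Canonical Format Indicator
    vid: int  # 12-bit VLAN identifier


def parse_dot1q_tag(tag: bytes) -> Dot1QTag:
    """Parse the 4-byte 802.1Q tag (TPID followed by TCI), both big-endian."""
    tpid, tci = struct.unpack("!HH", tag[:4])
    if tpid != 0x8100:
        raise ValueError(f"unexpected TPID 0x{tpid:04X}")
    return Dot1QTag(pcp=tci >> 13, cfi=(tci >> 12) & 0x1, vid=tci & 0x0FFF)


print(parse_dot1q_tag(bytes.fromhex("8100a064")))  # Dot1QTag(pcp=5, cfi=0, vid=100)
```

For an Ethernet frame the `cfi` field of the result should always be 0.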

CGI | Common Gateway Interface | In computing, Common Gateway Interface (CGI) offers a standard protocol for web servers to execute programs that run like console applications (also called command-line interface programs) on the server, so that the server can generate web pages dynamically. Such programs are known as CGI scripts or simply as CGIs. The specifics of how the script is executed are determined by the server. In the common case, a CGI script executes at the time a request is made and generates HTML. |
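A minimal sketch of a CGI script in Python, using only the standard library. The web server passes request details in environment variables such as `QUERY_STRING` and runs the program once per request; everything the program writes to standard output (a header block, a blank line, then the body) becomes the response. The `name` query parameter and the `render` function are hypothetical, chosen only for illustration.

```python
#!/usr/bin/env python3
import html
import os
import sys
from urllib.parse import parse_qs


def render(environ: dict) -> str:
    """Build the CGI output (headers, blank line, body) from the request environment."""
    query = parse_qs(environ.get("QUERY_STRING", ""))
    name = query.get("name", ["world"])[0]
    # Escape user input before embedding it in HTML.
    body = f"<html><body><p>Hello, {html.escape(name)}!</p></body></html>"
    return "Content-Type: text/html\n\n" + body


if __name__ == "__main__":
    sys.stdout.write(render(dict(os.environ)))
```

Keeping the logic in `render` rather than reading `os.environ` directly makes the script testable without a web server.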

Change Control | CC | A subset of change management concerned with identifying artifacts that will be placed under the control of a change control board. Change control may refer to explicit change control or implicit change control.

Also a synonym for change management. |

Change Control Board | CCB | The group of individuals responsible for processing and making final decisions on change requests to the artifacts under change control. |

Change Control Plan | CCP | Documents the types and levels of change control used on project artifacts. |

Change Management |

| Systematic management of feature, scope, or other requested changes to an artifact or project. Part of both configuration management and corrective activity management. |

Change Request | CR | A request to change an item under change control.

Usually a request to add, modify, or remove a system requirement based on a business need. May also be a request to change project planning. Change requests are a type of corrective activity management item. |

CHAP | Challenge Handshake Authentication Protocol | In computing, the Challenge-Handshake Authentication Protocol (CHAP) authenticates a user or network host to an authenticating entity. That entity may be, for example, an Internet service provider. CHAP is specified in RFC 1994.

CHAP provides protection against replay attacks by the peer through the use of an incrementally changing identifier and a variable challenge value. CHAP requires that both the client and server know the plaintext of the secret, although it is never sent over the network. CHAP therefore provides better security than the Password Authentication Protocol (PAP), which sends the password over the network in plaintext and offers no protection against replay. The MS-CHAP variant does not require either peer to know the plaintext and does not transmit it, but it has been broken. |
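The exchange can be sketched in Python. In RFC 1994 the response to an MD5 challenge is the MD5 hash of the one-octet Identifier, followed by the shared secret, followed by the challenge value; the authenticator computes the same value and compares. The secret shown is a hypothetical placeholder, and link-layer framing (PPP packets, lengths, names) is omitted.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """MD5 over Identifier octet + shared secret + challenge (RFC 1994)."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def authenticator_verify(identifier: int, secret: bytes, challenge: bytes,
                         response: bytes) -> bool:
    """The authenticator recomputes the expected value; compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# One exchange: the authenticator picks an identifier and a fresh random challenge.
secret = b"shared-secret"  # hypothetical; known to both peers, never sent
identifier, challenge = 1, os.urandom(16)
response = chap_response(identifier, secret, challenge)  # computed by the peer
print(authenticator_verify(identifier, secret, challenge, response))  # True
```

Because the next challenge will differ, a captured `response` is useless to an attacker who replays it, which is the replay protection described above.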

Chrome OS |

| Chrome OS is an operating system designed by Google that is based on the Linux kernel and uses the Google Chrome web browser as its principal user interface. As a result, Chrome OS primarily supports web applications. |

Circuit Switched |

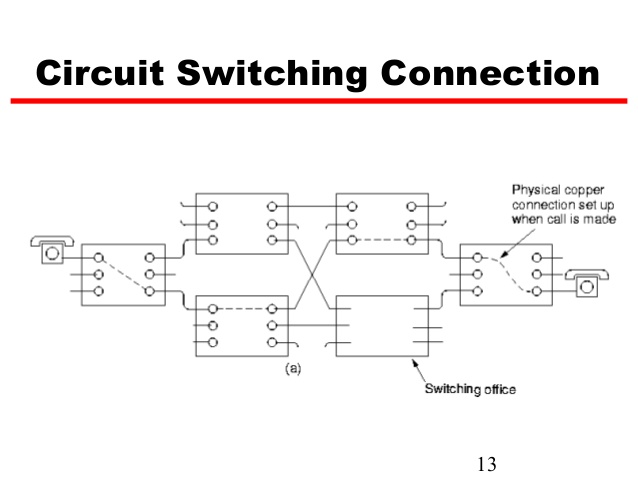

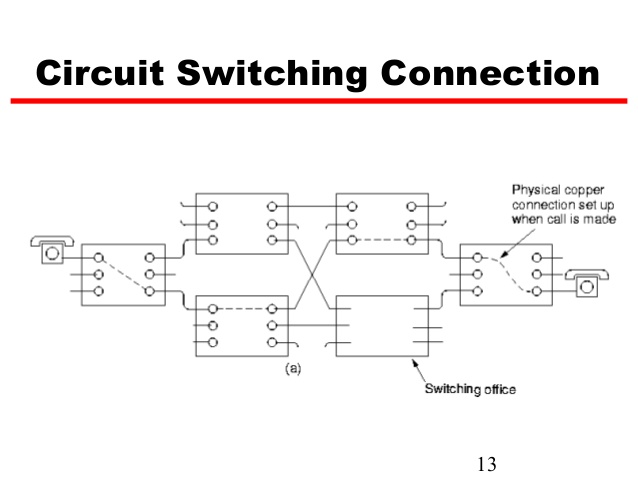

| Circuit switching is a method of implementing a telecommunications network in which two network nodes establish a dedicated communications channel (circuit) through the network before the nodes may communicate. The circuit guarantees the full bandwidth of the channel and remains connected for the duration of the communication session. The circuit functions as if the nodes were physically connected as with an electrical circuit.

|

Class 4 Softswitch |

| Softswitches used for transit VoIP traffic between carriers are usually called class 4 softswitches. Like other Class 4 telephone switches, a class 4 softswitch mainly routes large volumes of long-distance VoIP calls. The most important characteristics of a class 4 softswitch are protocol support and conversion, transcoding, call rate (calls per second), average time to route a single call, and number of concurrent calls. |

Class 5 Softswitch |

| Class 5 softswitches are intended for work with end users. These softswitches serve both local and long-distance telephony. Class 5 softswitches are characterized by additional services for end users and corporate clients, such as IP PBX features, call center services, calling card platforms, types of authorization, QoS, business groups and other features similar to those of other Class 5 telephone switches. Class 5 softswitches may also provide analog twisted-pair POTS access to subscribers' homes using special central office hardware such as ATAs, EMTAs, IADs and general-purpose PBXs. |

Class Model |

| An internal object-oriented view of a system showing the static class structure. |

CLF | Subscriber Location Function | A subscriber location function (SLF) is needed to map user addresses when multiple HSSs are used.

|

CMDB | Configuration Management Database | A configuration management database (CMDB) is an ITIL database used by an organization to store information about its IT hardware and software assets (commonly referred to as configuration items (CIs)) and about the relationships between those assets. It acts as a data warehouse or inventory for the organization's IT installations, and provides a means of understanding the organization's critical assets and their relationships, such as information systems, upstream sources or dependencies of assets, and the downstream targets of assets. |
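Because a CMDB records relationships as well as the CIs themselves, one common use is impact analysis: finding everything downstream of a failing component. The Python sketch below does this with a breadth-first search over a hypothetical in-memory set of CIs and "depends on" relationships; real CMDBs expose this through their own query interfaces, which are not modeled here.

```python
from collections import deque

# Hypothetical CIs: each key depends on the CIs listed for it.
DEPENDS_ON = {
    "billing-app": ["app-server-01", "billing-db"],
    "billing-db": ["db-server-02", "san-array-a"],
    "app-server-01": ["vm-host-1"],
    "crm-app": ["app-server-01"],
}


def impacted_by(ci: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every CI that directly or transitively depends on `ci`."""
    # Invert the edges once so we can walk from a failing CI to its dependents.
    dependents: dict[str, list[str]] = {}
    for parent, children in depends_on.items():
        for child in children:
            dependents.setdefault(child, []).append(parent)

    impacted: set[str] = set()
    queue = deque([ci])
    while queue:
        for parent in dependents.get(queue.popleft(), []):
            if parent not in impacted:  # also guards against cycles
                impacted.add(parent)
                queue.append(parent)
    return impacted


print(sorted(impacted_by("vm-host-1", DEPENDS_ON)))
# ['app-server-01', 'billing-app', 'crm-app']
```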

CNG | Comfort Noise Generation | Comfort noise (or comfort tone) is synthetic background noise used in radio and wireless communications to fill the artificial silence in a transmission resulting from voice activity detection or from the audio clarity of modern digital lines.

Some modern telephone systems (such as wireless and VoIP) use voice activity detection (VAD), a form of squelching where low volume levels are ignored by the transmitting device. In digital audio transmissions, this saves bandwidth of the communications channel by transmitting nothing when the source volume is under a certain threshold, leaving only louder sounds (such as the speaker's voice) to be sent. However, improvements in background noise reduction technologies can occasionally result in the complete removal of all noise. Although maximizing call quality is of primary importance, exhaustive removal of noise may not properly simulate the typical behavior of terminals on the PSTN system.

The result of receiving total silence, especially for a prolonged period, has a number of unwanted effects on the listener, including the following:

the listener may believe that the transmission has been lost, and therefore hang up prematurely.

the speech may sound "choppy" (see noise gate) and difficult to understand.

the sudden change in sound level can be jarring to the listener.

To counteract these effects, comfort noise is added, usually on the receiving end in wireless or VoIP systems, to fill in the silent portions of transmissions with artificial noise. The noise generated is at a low but audible volume level, and can vary based on the average volume level of received signals to minimize jarring transitions.

In many VoIP products, users may control how VAD and comfort noise are configured, or disable the feature entirely.

As part of the RTP audio video profile, RFC 3389 defines a standard for distributing comfort noise information in VoIP systems.

A similar concept is that of sidetone, the effect of sound that is picked up by a telephone's mouthpiece and introduced (at low level) into the earpiece of the same handset, acting as feedback. |
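The interplay of VAD and comfort noise can be sketched in Python. The sender drops frames whose level falls below a threshold, and the receiver fills each gap with low-level random noise instead of silence. Assumptions, all illustrative: samples are floats in [-1.0, 1.0], the threshold and noise level are arbitrary, the noise level is fixed rather than tracking the received signal, and the noise is plain uniform white noise rather than the spectrally shaped noise that RFC 3389 payloads can describe.

```python
import math
import random
from typing import Optional

Frame = list[float]


def rms(frame: Frame) -> float:
    """Root-mean-square level of a (non-empty) frame of samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def transmit(frames: list[Frame], vad_threshold: float = 0.01) -> list[Optional[Frame]]:
    """Sender with VAD: frames quieter than the threshold are not sent (None)."""
    return [f if rms(f) >= vad_threshold else None for f in frames]


def receive(packets: list[Optional[Frame]], frame_len: int,
            noise_level: float = 0.002, seed: Optional[int] = None) -> list[Frame]:
    """Receiver: replace every missing frame with low-level comfort noise."""
    rng = random.Random(seed)
    return [p if p is not None
            else [rng.uniform(-noise_level, noise_level) for _ in range(frame_len)]
            for p in packets]


speech, silence = [0.5, -0.5] * 80, [0.0] * 160
played = receive(transmit([speech, silence]), frame_len=160, seed=1)
```

The listener hears a faint hiss during the gap rather than dead air, which addresses the "has the call dropped?" effect listed above.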

Cobol |

| COBOL is a compiled English-like computer programming language designed for business use. It is imperative, procedural and, since 2002, object-oriented. COBOL is primarily used in business, finance, and administrative systems for companies and governments. COBOL is still widely used in legacy applications deployed on mainframe computers, such as large-scale batch and transaction processing jobs. But due to its declining popularity and the retirement of experienced COBOL programmers, programs are being migrated to new platforms, rewritten in modern languages or replaced with software packages. Most programming in COBOL is now purely to maintain existing applications.

COBOL was designed in 1959 by CODASYL and was partly based on previous programming language design work by Grace Hopper, commonly referred to as "the (grand)mother of COBOL". It was created as part of a US Department of Defense effort to create a portable programming language for data processing. Although COBOL was intended as a stopgap, the Department of Defense promptly forced computer manufacturers to provide it, resulting in its widespread adoption. It was standardized in 1968 and has since been revised four times. Expansions include support for structured and object-oriented programming. The current standard is ISO/IEC 1989:2014.

COBOL statements have an English-like syntax, which was designed to be self-documenting and highly readable. However, it is verbose and uses over 300 reserved words. Where a modern, succinct syntax would write y = x;, COBOL writes MOVE x TO y. COBOL code is split into four divisions (identification, environment, data and procedure) containing a rigid hierarchy of sections, paragraphs and sentences. COBOL lacks a large standard library: the standard specifies 43 statements, 87 functions and just one class. |

Code and Fix Lifecycle |

| The system is started from a general concept and evolved through some combination of informal design, code, debug, and test methodologies until it is ready to release. |

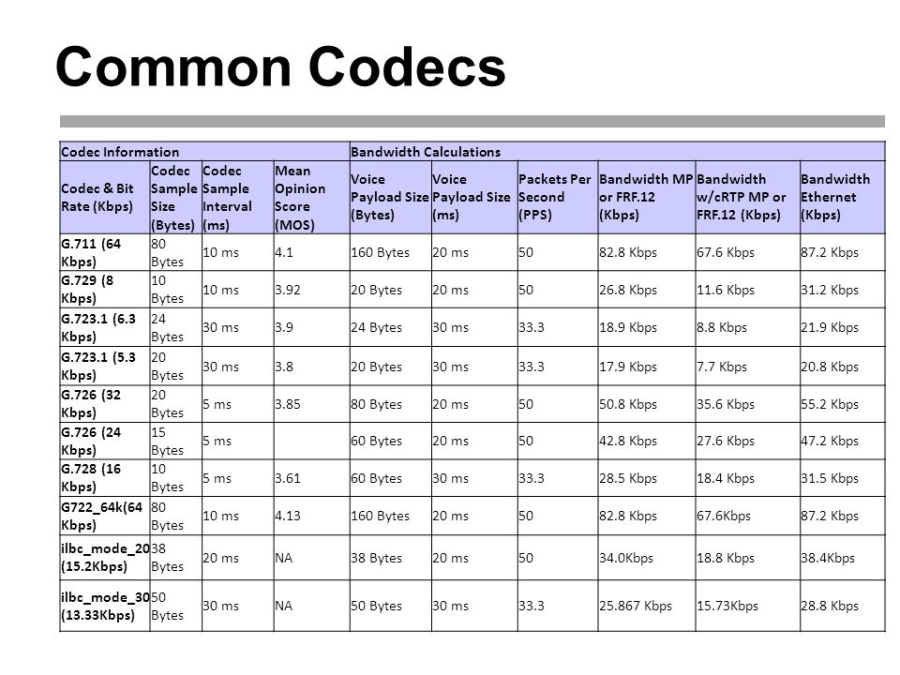

Codecs |

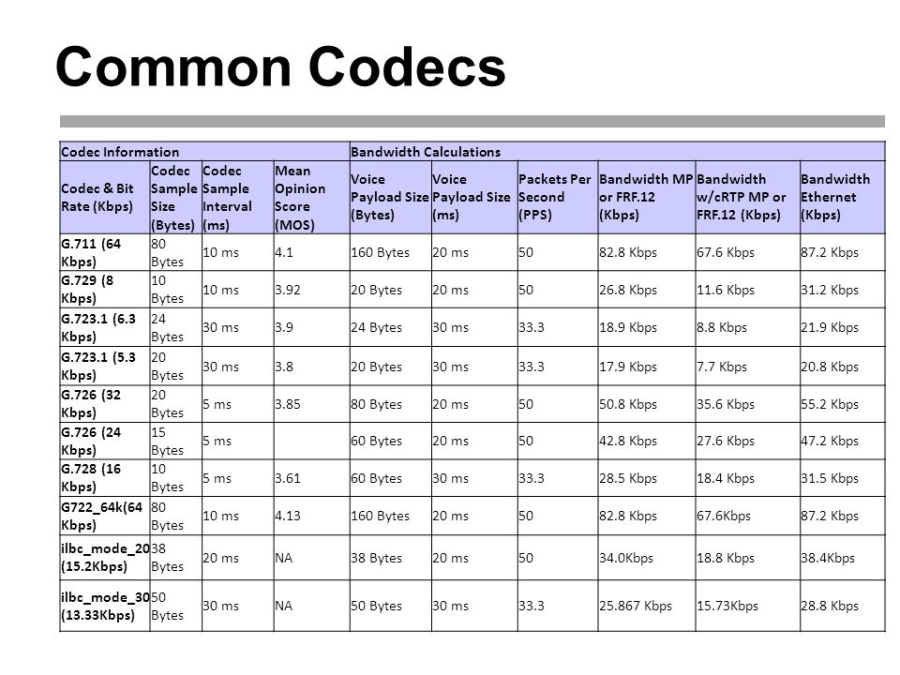

| A codec is a device or computer program for encoding or decoding a digital data stream or signal. Codec is a portmanteau of coder-decoder.

A coder encodes a data stream or a signal for transmission or storage, possibly in encrypted form, and the decoder function reverses the encoding for playback or editing. Codecs are used in videoconferencing, streaming media, and video editing applications.

|
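The coder/decoder pairing can be illustrated with a deliberately simple, lossless toy codec, run-length encoding, sketched in Python. It is not a media codec (audio and video codecs are typically lossy and far more elaborate); it only shows the defining property that `decode` reverses `encode`. The byte layout, (count, value) pairs with runs capped at 255, is an arbitrary choice for this sketch.

```python
from itertools import groupby


def encode(data: bytes) -> bytes:
    """Run-length encode: each run becomes (count, value), runs capped at 255."""
    out = bytearray()
    for value, group in groupby(data):
        count = len(list(group))
        while count > 0:
            chunk = min(count, 255)
            out += bytes([chunk, value])
            count -= chunk
    return bytes(out)


def decode(encoded: bytes) -> bytes:
    """Reverse the encoding by expanding each (count, value) pair."""
    out = bytearray()
    for i in range(0, len(encoded), 2):
        count, value = encoded[i], encoded[i + 1]
        out += bytes([value]) * count
    return bytes(out)


print(encode(b"aaab"))  # b'\x03a\x01b'
```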

Coding |

| The core activity of construction; involves creating source code instructions and/or data that define the behavior of a software system. |

Coding Standard |

| Synonym for Construction Standard. |

CoE | Centre of Excellence | A center of excellence (COE) is a team, a shared facility or an entity that provides leadership, best practices, research, support and/or training for a focus area. The focus area might be a technology (e.g. Java), a business concept (e.g. BPM), a skill (e.g. negotiation) or a broad area of study (e.g. women's health). A center of excellence may also be aimed at revitalizing stalled initiatives.

Within an organization, a center of excellence may refer to a group of people, a department or a shared facility. It may also be known as a competency center or a capability center. The term may also refer to a network of institutions collaborating with each other to pursue excellence in a particular area. |

COFDM | Coded orthogonal frequency-division multiplexing | In coded orthogonal frequency-division multiplexing (COFDM), forward error correction (convolutional coding) and time/frequency interleaving are applied to the signal being transmitted. This is done to overcome errors in mobile communication channels affected by multipath propagation and Doppler effects. |
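As a minimal sketch of the two techniques named in the COFDM entry, the Python below implements a rate-1/2 convolutional encoder with constraint length 3 (generator polynomials 7 and 5 in octal, a common textbook choice and not necessarily what any broadcast standard uses) and a simple row/column block interleaver. The function names are hypothetical. Interleaving spreads adjacent coded bits apart, so a burst of channel errors lands on bits that are far apart in the original stream, where the decoder can correct them more easily.

```python
def conv_encode(bits, g1=0b111, g2=0b101, k=3):
    """Rate-1/2 convolutional encoder: emits two parity bits per input bit."""
    state = 0  # the previous k-1 input bits
    out = []
    for b in bits:
        reg = (b << (k - 1)) | state              # newest bit in the top position
        out.append(bin(reg & g1).count("1") % 2)  # parity of taps selected by g1
        out.append(bin(reg & g2).count("1") % 2)  # parity of taps selected by g2
        state = reg >> 1                          # shift: drop the oldest bit
    return out


def interleave(bits, rows, cols):
    """Block interleaver: write row by row, read column by column."""
    if len(bits) != rows * cols:
        raise ValueError("block size mismatch")
    return [bits[r * cols + c] for c in range(cols) for r in range(rows)]


def deinterleave(bits, rows, cols):
    """Inverse of interleave: write column by column, read row by row."""
    if len(bits) != rows * cols:
        raise ValueError("block size mismatch")
    out = [0] * (rows * cols)
    i = 0
    for c in range(cols):
        for r in range(rows):
            out[r * cols + c] = bits[i]
            i += 1
    return out
```

For example, `conv_encode([1, 0, 1, 1])` yields the pairs 11 10 00 01, and `deinterleave(interleave(x, r, c), r, c)` returns `x` unchanged.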

Collaboration Model |

| Specifies the set of object roles and their interactions by showing and describing the messages exchanged. The focus is on the relationship between roles. |

Collaborative Construction | CCON

(see-kahn) | A technique used during construction where a small group of two to six engineers works closely together to incrementally construct system functionality. It is marked by frequent, informal communication; iterative, code-oriented, low-level design techniques; and shared ownership of source code and test responsibilities. |

Collective code ownership |

| Collective code ownership is the explicit convention that every team member can make changes to any code file as necessary: either to complete a development task, to repair a defect, or to improve the code's overall structure. |

COLO | Co-located |

|

Compatibility Test |

| See configuration test. |

Compiled Language |

| A compiled language is a programming language whose implementations are typically compilers (translators that generate machine code from source code), and not interpreters (step-by-step executors of source code, where no pre-runtime translation takes place).

The term is somewhat vague. In principle, any language can be implemented with a compiler or with an interpreter. A combination of both solutions is also common: a compiler can translate the source code into some intermediate form (often called p-code or bytecode), which is then passed to an interpreter which executes it. |

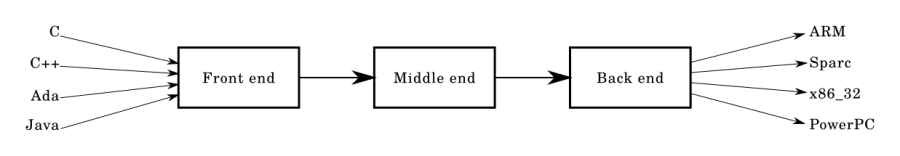

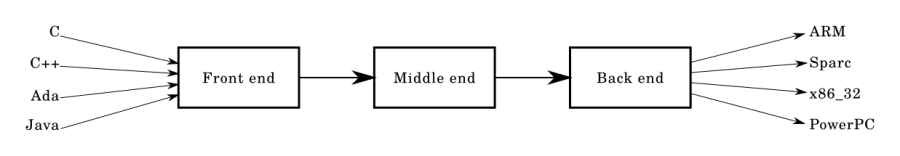

Compiler Back end |

| The back end takes the optimized IR from the middle end. It may perform more analysis, transformations and optimizations that are specific to the target CPU architecture. The back end generates the target-dependent assembly code, performing register allocation in the process. It also performs instruction scheduling, which reorders instructions to keep parallel execution units busy by filling delay slots. Although most optimization problems are NP-hard, heuristic techniques for them are well developed and implemented in production-quality compilers. Typically the output of a back end is machine code specialized for a particular processor and operating system. |
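Register allocation is often framed as graph coloring: variables that are live at the same time interfere and must not share a register. The sketch below is a simplified simplify/select allocator in the spirit of Chaitin-style allocators with optimistic spilling, assuming the interference graph has already been computed by liveness analysis. It is not how any particular production compiler does it, and `allocate_registers` and the register names are hypothetical.

```python
def allocate_registers(interference, registers):
    """Greedy graph-coloring register allocation.

    interference: dict mapping each variable to the set of variables it
    interferes with (edges must be symmetric).
    Returns (assignment, spilled).
    """
    k = len(registers)
    graph = {v: set(ns) for v, ns in interference.items()}
    stack = []
    # Simplify: remove a node with fewer than k neighbours; if none exists,
    # optimistically push the highest-degree node as a spill candidate.
    while graph:
        node = next((v for v in sorted(graph) if len(graph[v]) < k), None)
        if node is None:
            node = max(sorted(graph), key=lambda v: len(graph[v]))
        stack.append(node)
        for n in graph.pop(node):
            graph[n].discard(node)
    # Select: pop nodes and give each a register not used by a coloured neighbour.
    assignment, spilled = {}, []
    while stack:
        node = stack.pop()
        taken = {assignment[n] for n in interference[node] if n in assignment}
        free = [r for r in registers if r not in taken]
        if free:
            assignment[node] = free[0]
        else:
            spilled.append(node)  # must live in memory instead
    return assignment, spilled
```

With three mutually interfering variables and only two registers, one variable is spilled; a real back end would then insert loads and stores for it and rerun allocation.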

Compiler Front end |

| The front end verifies syntax and semantics according to a specific source language. For statically typed languages it performs type checking by collecting type information. If the input program is syntactically incorrect or has a type error, it generates errors and warnings, highlighting them in the source code. Aspects of the front end include lexical analysis, syntax analysis, and semantic analysis. The front end transforms the input program into an intermediate representation (IR) for further processing by the middle end. This IR is usually a lower-level representation of the program with respect to the source code. |
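To make lexical analysis and syntax analysis concrete, here is a minimal front-end sketch for a toy arithmetic language (integers, identifiers, `+ - * /`, and parentheses; the grammar is an assumption chosen for illustration). The lexer turns characters into tokens, and a recursive-descent parser turns the tokens into a tree-shaped IR, reporting syntax errors with their column. Type checking is out of scope here.

```python
import re

# One token per match: an integer, an identifier, or any other single character.
TOKEN_RE = re.compile(r"\s*(?:(\d+)|([A-Za-z_]\w*)|(.))")


def lex(src):
    """Lexical analysis: turn source text into (kind, text, column) tokens."""
    src = src.rstrip()
    tokens, pos = [], 0
    while pos < len(src):
        m = TOKEN_RE.match(src, pos)
        num, name, other = m.groups()
        col = m.start(m.lastindex)
        if num:
            tokens.append(("num", num, col))
        elif name:
            tokens.append(("name", name, col))
        elif other in "+-*/()":
            tokens.append(("op", other, col))
        else:
            raise SyntaxError(f"unexpected character {other!r} at column {col}")
        pos = m.end()
    tokens.append(("eof", "", len(src)))
    return tokens


class Parser:
    """Recursive descent for:  expr   := term (('+'|'-') term)*
                               term   := factor (('*'|'/') factor)*
                               factor := num | name | '(' expr ')'"""

    def __init__(self, tokens):
        self.tokens, self.i = tokens, 0

    def peek(self):
        return self.tokens[self.i]

    def take(self):
        tok = self.tokens[self.i]
        self.i += 1
        return tok

    def parse(self):
        tree = self.binary("+-", self.term)
        kind, text, col = self.peek()
        if kind != "eof":
            raise SyntaxError(f"unexpected {text!r} at column {col}")
        return tree

    def binary(self, ops, operand):
        """Left-associative chain of binary operators drawn from ops."""
        node = operand()
        while self.peek()[0] == "op" and self.peek()[1] in ops:
            op = self.take()[1]
            node = (op, node, operand())
        return node

    def term(self):
        return self.binary("*/", self.factor)

    def factor(self):
        kind, text, col = self.take()
        if kind == "num":
            return ("num", int(text))
        if kind == "name":
            return ("var", text)
        if text == "(":
            node = self.binary("+-", self.term)
            if self.take()[1] != ")":
                raise SyntaxError(f"expected ')' after column {col}")
            return node
        raise SyntaxError(f"unexpected {text or 'end of input'!r} at column {col}")
```

Operator precedence falls out of the grammar: `*` and `/` bind tighter because `term` is parsed inside `expr`.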

Compiler Middle end |

| The middle end performs optimizations on the IR that are independent of the CPU architecture being targeted. This source code/machine code independence is intended to enable generic optimizations to be shared between versions of the compiler supporting different languages and target processors. Examples of middle end optimizations are removal of useless (dead code elimination) or unreachable code (reachability analysis), discovery and propagation of constant values (constant propagation), relocation of computation to a less frequently executed place (e.g., out of a loop), or specialization of computation based on the context. The result is the "optimized" IR that is used by the back end. |
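The sketch below illustrates two of the middle-end optimizations listed above, constant propagation (with folding) and dead code elimination, on a tiny three-address IR of the form `(dest, op, arg1, arg2)`. The IR format and function names are assumptions made for illustration; real compilers typically work on SSA form and handle control flow, which this straight-line example ignores.

```python
import operator

# Only these operators are folded in this sketch.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def const_propagate(code):
    """Substitute known constant values and fold fully constant operations."""
    known = {}
    out = []
    for dest, op, a, b in code:
        a = known.get(a, a)
        b = known.get(b, b)
        if op == "const":
            known[dest] = a
            out.append((dest, "const", a, None))
        elif isinstance(a, int) and isinstance(b, int):
            value = OPS[op](a, b)
            known[dest] = value
            out.append((dest, "const", value, None))
        else:
            known.pop(dest, None)  # dest is no longer a known constant
            out.append((dest, op, a, b))
    return out


def eliminate_dead_code(code, live_out):
    """Backward pass: keep an instruction only if its result is used later
    or live on exit (instructions are assumed to have no side effects)."""
    live = set(live_out)
    kept = []
    for dest, op, a, b in reversed(code):
        if dest in live:
            kept.append((dest, op, a, b))
            live.discard(dest)
            live.update(x for x in (a, b) if isinstance(x, str))
    kept.reverse()
    return kept
```

Running the two passes in sequence matters: folding turns `y = x * 2` into a constant, after which nothing reads `x` or `y` and both definitions become dead.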

Compiler preprocessor |

| In computer science, a preprocessor is a program that processes its input data to produce output that is used as input to another program. The output is said to be a preprocessed form of the input data, which is often used by some subsequent programs like compilers. The amount and kind of processing done depends on the nature of the preprocessor; some preprocessors are only capable of performing relatively simple textual substitutions and macro expansions, while others have the power of full-fledged programming languages.

A common example from computer programming is the processing performed on source code before the next step of compilation. In some computer languages (e.g., C and PL/I) there is a phase of translation known as preprocessing. It can also include macro processing, file inclusion and language extensions. |
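As a minimal sketch of textual preprocessing, the function below handles only object-like `#define NAME replacement` macros with whole-word substitution, loosely modeled on the C preprocessor. It deliberately ignores function-like macros, `#include`, conditionals, and string literals, so it is an illustration rather than a conforming implementation; `preprocess` and `expand` are hypothetical names.

```python
import re

DEFINE_RE = re.compile(r"\s*#\s*define\s+([A-Za-z_]\w*)\s*(.*)$")
IDENT_RE = re.compile(r"[A-Za-z_]\w*")


def preprocess(source):
    """Expand object-like macros; a macro applies only to lines after its #define."""
    macros = {}
    output = []
    for line in source.splitlines():
        m = DEFINE_RE.match(line)
        if m:
            macros[m.group(1)] = m.group(2).strip()
            continue  # directives are consumed, not emitted
        output.append(expand(line, macros))
    return "\n".join(output)


def expand(text, macros, active=frozenset()):
    """Replace identifiers that name macros, rescanning each replacement.
    A macro is not re-expanded inside its own expansion (as in C)."""
    def repl(m):
        name = m.group(0)
        if name in macros and name not in active:
            return expand(macros[name], macros, active | {name})
        return name
    return IDENT_RE.sub(repl, text)
```

Because replacements are rescanned, a macro may refer to another macro defined later, as long as both are defined before the line that uses them.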

Compiler |

| A compiler is computer software that transforms computer code written in one programming language (the source language) into another programming language (the target language). Compilers are a type of translator that support digital devices, primarily computers. The name compiler is primarily used for programs that translate source code from a high-level programming language to a lower level language (e.g., assembly language, object code, or machine code) to create an executable program.

However, there are many different types of compilers. If the compiled program can run on a computer whose CPU or operating system is different from the one on which the compiler runs, the compiler is a cross-compiler. A bootstrap compiler is written in the language that it intends to compile. A program that translates from a low-level language to a higher level one is a decompiler. A program that translates between high-level languages is usually called a source-to-source compiler or transpiler. A language rewriter is usually a program that translates the form of expressions without a change of language. The term compiler-compiler refers to tools used to create parsers that perform syntax analysis.

A compiler is likely to perform many or all of the following operations: preprocessing, lexical analysis, parsing, semantic analysis (syntax-directed translation), conversion of input programs to an intermediate representation, code optimization and code generation. Compilers implement these operations in phases that promote efficient design and correct transformations of source input to target output. Program faults caused by incorrect compiler behavior can be very difficult to track down and work around; therefore, compiler implementers invest significant effort to ensure compiler correctness.

|

Component |

| Software component. An abstraction that refers to a part of a software system. |

Component Test |

| Test of a software component in isolation from its system. |

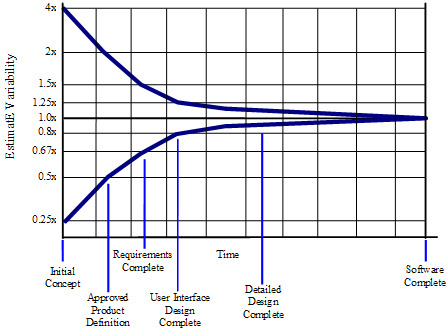

Cone of Uncertainty |

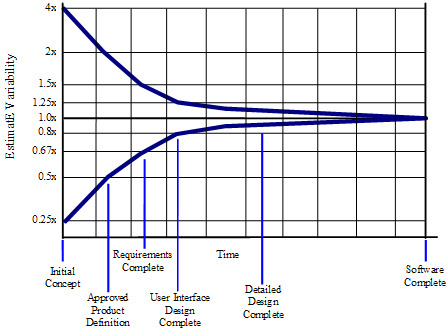

| Early in a project, specific details of the nature of the software to be built, details of specific requirements, details of the solution, project plan, staffing, and other project variables are unclear. The variability in these factors contributes variability to project estimates: an accurate estimate of a variable phenomenon must include the variability in the phenomenon itself. As these sources of variability are further investigated and pinned down, the variability in the project diminishes, and so the variability in the project estimates can also diminish. This phenomenon is known as the "Cone of Uncertainty", which is illustrated in the accompanying figure. As the figure suggests, significant narrowing of the Cone occurs during the first 20-30% of the total calendar time for the project.

|

Cone of Uncertainty |

| The amount of possible error in a software project estimate, which is very large in the early stages of a project and shrinks dramatically as the project nears completion. |

Configuration Item | CI | A description of an artifact or group of artifacts that is identified by the configuration management plan. Configuration items are used to apply CM policies and processes to organizational and project artifacts. |

Configuration Management | CM | Activities and tasks related to defining, documenting, releasing, and maintaining the integrity of information in or about a system. |

Configuration Test |

| Test of a software system to determine behavior with different configurations, platforms, environments, etc. |

Construction |

| Software construction. Implementing a design to create a software system using technology. Also denotes the construction CKA. See CxStand_Construction for more information. |

Construction Environment |

| See development environment. |

Construction Lead |

| Responsible for construction, integration, product builds, technology issues, development environment, and deployment issues. |

Construction Standard |

| A standard describing detailed conventions and styles that developers should follow when creating a system's source code or related construction artifacts. Coding Standard is a common synonym. |

Construction Test Environment |

| See local test environment. |

Construction Testing |

| A best practice that calls for several types of testing to be performed during the construction of a component, by the engineer(s) creating it, to verify additions or modifications both at the component level and in the context of the system. |

Construx Knowledge Area | CKA | The basis for organizing CxOne and other Construx software engineering resources. Based on the SWEBOK organization of software engineering.

Sometimes referred to as 'CxOne Knowledge Areas'. |

Continuous Deployment |

| Continuous deployment aims to reduce the time elapsed between writing a line of code and making that code available to users in production. To achieve continuous deployment, the team relies on infrastructure that automates and instruments the various steps leading up to deployment, so that after each integration that successfully meets the release criteria, the live application is updated with the new code. |

continuous integration |

| In software engineering, continuous integration (CI) is the practice of merging all developer working copies to a shared mainline several times a day. Grady Booch first named and proposed CI in his 1991 method, although he did not advocate integrating several times a day. Extreme programming (XP) adopted the concept of CI and did advocate integrating more than once per day – perhaps as many as tens of times per day. |

Continuous Integration |

| Continuous Integration is the practice of merging code changes into a shared repository several times a day so that a releasable product version is available at any moment. This requires an integration procedure that is reproducible and automated. |

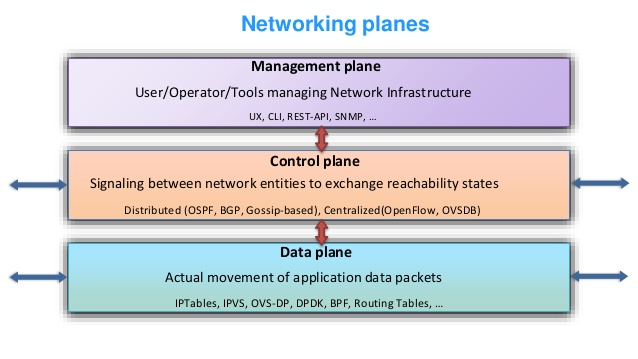

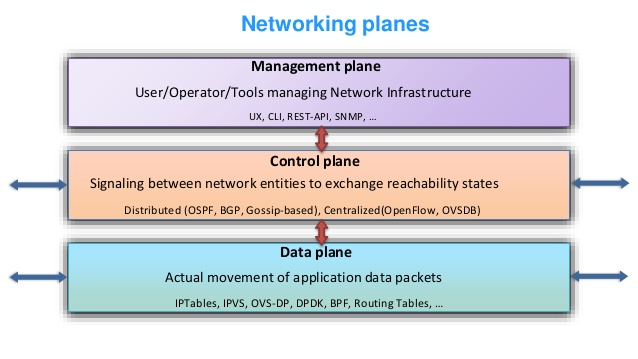

Control Plane |

| In routing, the control plane is the part of the router architecture that is concerned with drawing the network topology, or the information in a (possibly augmented) routing table that defines what to do with incoming packets. Control plane functions, such as participating in routing protocols, run in the architectural control element. In most cases, the routing table contains a list of destination addresses and the outgoing interface(s) associated with them. Control plane logic also can define certain packets to be discarded, as well as preferential treatment of certain packets for which a high quality of service is defined by such mechanisms as differentiated services.

Depending on the specific router implementation, there may be a separate forwarding information base that is populated (i.e., loaded) by the control plane, but used by the forwarding plane to look up packets, at very high speed, and decide how to handle them.

|
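The division of labor described in the Control Plane entry can be sketched in Python: a control-plane step installs routes into a forwarding table (here supplied directly rather than learned from a routing protocol), and a forwarding-plane lookup picks the most specific matching prefix, known as longest-prefix match. The class name, route format, and interface names are hypothetical, and production routers use tries or TCAM hardware rather than the linear scan shown here.

```python
import ipaddress


class ForwardingTable:
    """Toy FIB: populated by the control plane, queried by the forwarding plane."""

    def __init__(self):
        self.entries = []  # (network, outgoing_interface)

    def install_routes(self, routes):
        """Control plane: load (prefix, interface) pairs into the FIB."""
        for prefix, interface in routes:
            self.entries.append((ipaddress.ip_network(prefix), interface))
        # Most specific prefixes first, so the first match is the longest.
        self.entries.sort(key=lambda e: e[0].prefixlen, reverse=True)

    def lookup(self, address):
        """Forwarding plane: return the interface for the longest matching prefix."""
        addr = ipaddress.ip_address(address)
        for network, interface in self.entries:
            if addr in network:
                return interface
        return None  # no matching route: the packet is dropped
```

A default route (`0.0.0.0/0`) matches every IPv4 address but, having the shortest prefix, is chosen only when nothing more specific matches.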

Copyleft |

| Copyleft (a play on the word copyright) is the practice of offering people the right to freely distribute copies and modified versions of a work with the stipulation that the same rights be preserved in derivative works down the line. Copyleft software licenses are considered protective or reciprocal, as contrasted with permissive free software licenses.

Copyleft is a form of licensing, and can be used to maintain copyright conditions for works ranging from computer software, to documents, to art, to scientific discoveries and instruments in medicine. In general, copyright law is used by an author to prohibit recipients from reproducing, adapting, or distributing copies of their work. In contrast, under copyleft, an author must give every person who receives a copy of the work permission to reproduce, adapt, or distribute it, with the accompanying requirement that any resulting copies or adaptations are also bound by the same licensing agreement.

Copyleft licenses for software require that information necessary for reproducing and modifying the work must be made available to recipients of the binaries. The source code files will usually contain a copy of the license terms and acknowledge the authors.

Copyleft-type licenses are a novel use of existing copyright law to ensure a work remains freely available. The GNU General Public License (GPL), originally written by Richard Stallman, was the first software copyleft license to see extensive use, and continues to dominate in that area. Creative Commons, a non-profit organization founded by Lawrence Lessig, provides a similar license condition called share-alike.

|

Corrective Activity Management | CAM | The management of identified change requests, defects, risks, and issues.

CAM is a CxOne abstraction allowing reuse of materials and processes for the management of project work not explicitly identified in the project plan. |

CoS | Class of Service | Class of service is a parameter used in data and voice protocols to differentiate the types of payloads contained in the packet being transmitted. The objective of such differentiation is generally associated with assigning priorities to the data payload or access levels to the telephone call. |

COTS | Commercial off-the-shelf | Commercial off-the-shelf or commercially available off-the-shelf (COTS) is a term used to describe the purchase of packaged solutions which are then adapted to satisfy the needs of the purchasing organization, rather than the commissioning of custom-made, or bespoke, solutions. A related term, Mil-COTS, refers to COTS products for use by the U.S. military.

In the context of the U.S. government, the Federal Acquisition Regulation (FAR) has defined "COTS" as a formal term for commercial items, including services, available in the commercial marketplace that can be bought and used under government contract. For example, Microsoft is a COTS software provider. Goods and construction materials may qualify as COTS but bulk cargo does not. Services associated with the commercial items may also qualify as COTS, including installation services, training services, and cloud services.

COTS purchases are alternatives to custom software or one-off developments – government-funded developments or otherwise.

Although COTS products can be used out of the box, in practice the COTS product must be configured to achieve the needs of the business and integrated with existing organizational systems. Extending the functionality of COTS products via custom development is also an option; however, this decision should be carefully considered due to the long-term support and maintenance implications. Such customized functionality is not supported by the COTS vendor, and so brings its own set of issues when upgrading the COTS product. |

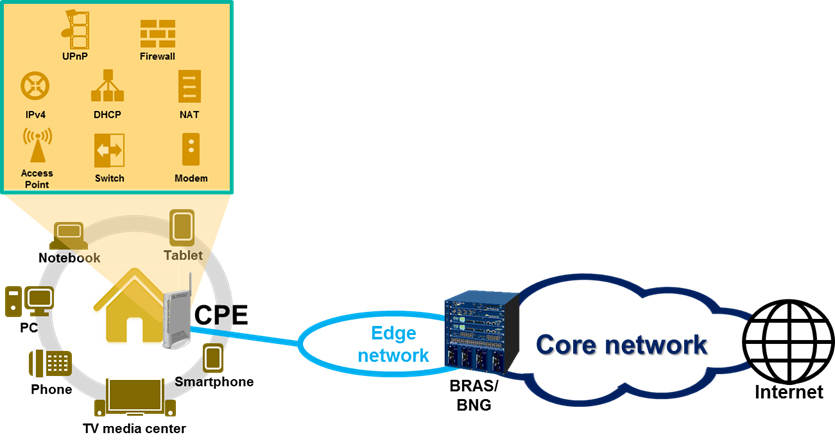

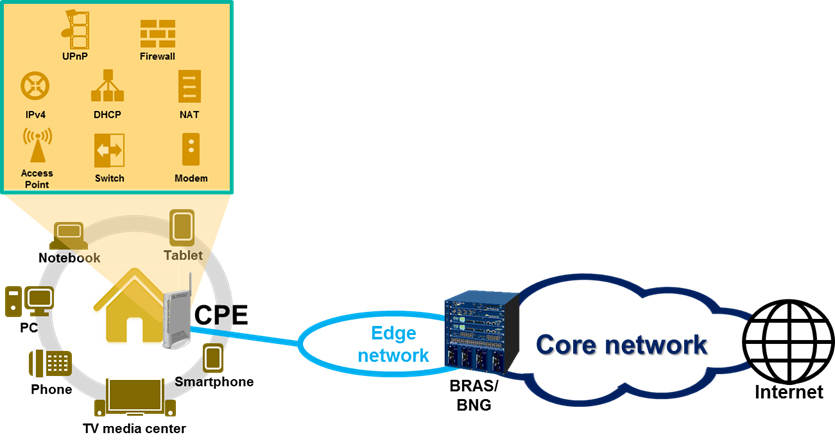

CPE | Customer Premises Equipment | Customer-premises equipment or customer-provided equipment (CPE) is any terminal and associated equipment located at a subscriber's premises and connected with a carrier's telecommunication circuit at the demarcation point.

|

CPM | critical path method | The critical path method (CPM), or critical path analysis (CPA), is an algorithm for scheduling a set of project activities.[1] It is commonly used in conjunction with the program evaluation and review technique (PERT). |
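A minimal sketch of the critical path method: a forward pass in dependency order computes each activity's earliest finish time, and the critical path is the chain of activities that determines the project's total duration. The function name and the activities in the test are made up; the sketch assumes the dependencies form a directed acyclic graph (`graphlib` raises `CycleError` otherwise) and omits the backward pass that computes slack.

```python
from graphlib import TopologicalSorter


def critical_path(durations, depends_on):
    """Return (project_length, critical_path) for an activity network.

    durations: activity -> duration
    depends_on: activity -> iterable of predecessor activities
    """
    graph = {a: set(depends_on.get(a, ())) for a in durations}
    finish, via = {}, {}
    for a in TopologicalSorter(graph).static_order():
        preds = list(depends_on.get(a, ()))
        start = max((finish[p] for p in preds), default=0)  # earliest start
        # Remember the predecessor that forces the latest start.
        via[a] = max(preds, key=lambda p: finish[p]) if preds else None
        finish[a] = start + durations[a]
    last = max(finish, key=finish.get)
    length = finish[last]
    path = []
    while last is not None:
        path.append(last)
        last = via[last]
    return length, path[::-1]
```

Any delay to an activity on the returned path delays the whole project; activities off the path have some slack.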

CPS | Carrier Pre-Select | Carrier preselect is a term relating to the telecommunications industry. It is a method of routing calls for least-cost routing (LCR) without the need to program a PBX telephone system.

This is the process whereby a telephone subscriber whose telephone line is maintained by one company, usually a former monopoly provider (e.g. BT), can choose to have some of their calls automatically routed across a different telephone company's network (e.g. Talk Talk) without needing to dial a special code or install special equipment. |

CRC | cyclic redundancy check | A cyclic redundancy check (CRC) is an error-detecting code commonly used in digital networks and storage devices to detect accidental changes to raw data. Blocks of data entering these systems get a short check value attached, based on the remainder of a polynomial division of their contents. On retrieval, the calculation is repeated and, in the event the check values do not match, corrective action can be taken against data corruption. CRCs can be used for error correction (see bitfilters).

CRCs are so called because the check (data verification) value is a redundancy (it expands the message without adding information) and the algorithm is based on cyclic codes. CRCs are popular because they are simple to implement in binary hardware, easy to analyze mathematically, and particularly good at detecting common errors caused by noise in transmission channels. Because the check value has a fixed length, the function that generates it is occasionally used as a hash function. |
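To illustrate the polynomial-division idea, here is a bit-at-a-time CRC-32 in Python using the reflected polynomial 0xEDB88320 with an initial value and final XOR of 0xFFFFFFFF (the CRC-32 variant used by Ethernet, zlib, and PNG, so its results should agree with the standard library's `zlib.crc32`). Production code normally uses a table-driven or hardware-accelerated implementation instead.

```python
def crc32(data: bytes) -> int:
    """Bitwise CRC-32: the remainder of polynomial division over GF(2)."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # Shift out one bit; if it was 1, "subtract" (XOR) the polynomial.
            if crc & 1:
                crc = (crc >> 1) ^ 0xEDB88320
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF
```

The standard check value is `crc32(b"123456789") == 0xCBF43926`. Because CRC-32 detects every single-bit error, flipping any one bit of a message always changes its check value.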

CRC Cards | Class Responsibility Collaborator Cards | CRC Cards are an object oriented design technique teams can use to discuss what a class should know and do and what other classes it interacts with.

CRC cards are usually created from index cards. Members of a brainstorming session will write up one CRC card for each relevant class/object of their design. The card is partitioned into three areas: on top of the card, the class name; on the left, the responsibilities of the class; and on the right, the collaborators (other classes) with which this class interacts to fulfill its responsibilities.

|

CSCF | Call Session Control Function | Several roles of SIP servers or proxies, collectively called Call Session Control Function (CSCF), are used to process SIP signaling packets in the IMS.

A Proxy-CSCF (P-CSCF) is a SIP proxy that is the first point of contact for the IMS terminal. It can be located either in the visited network (in full IMS networks) or in the home network (when the visited network is not IMS compliant yet). Some networks may use a Session Border Controller (SBC) for this function. The P-CSCF is at its core a specialized SBC for the User–network interface, which protects not only the network but also the IMS terminal. The use of an additional SBC between the IMS terminal and the P-CSCF is unnecessary and infeasible because the signaling is encrypted on this leg. The terminal discovers its P-CSCF via DHCP, or the address may be configured (e.g. during initial provisioning or via a 3GPP IMS Management Object (MO)), stored in the ISIM, or assigned in the PDP Context (in General Packet Radio Service (GPRS)).

An Interrogating-CSCF (I-CSCF) is another SIP function located at the edge of an administrative domain. Its IP address is published in the Domain Name System (DNS) of the domain (using NAPTR and SRV type of DNS records), so that remote servers can find it and use it as a forwarding point (e.g., registering) for SIP packets to this domain.

A Serving-CSCF (S-CSCF) is the central node of the signaling plane. It is a SIP server, but performs session control too. It is always located in the home network. It uses Diameter Cx and Dx interfaces to the HSS to download user profiles and upload user-to-S-CSCF associations (the user profile is only cached locally for processing reasons and is not changed). All necessary subscriber profile information is loaded from the HSS.

|

csh |

| The C shell (csh or the improved version, tcsh) is a Unix shell created by Bill Joy while he was a graduate student at University of California, Berkeley in the late 1970s. It has been widely distributed, beginning with the 2BSD release of the Berkeley Software Distribution (BSD) that Joy began distributing in 1978. Other early contributors to the ideas or the code were Michael Ubell, Eric Allman, Mike O'Brien and Jim Kulp.

The C shell is a command processor typically run in a text window, allowing the user to type commands. The C shell can also read commands from a file, called a script. Like all Unix shells, it supports filename wildcarding, piping, here documents, command substitution, variables and control structures for condition-testing and iteration. What differentiated the C shell from others, especially in the 1980s, were its interactive features and overall style. Its new features made it easier and faster to use. The overall style of the language looked more like C and was seen as more readable. |

CSI | Customer Site Interconnect | In telecommunications, interconnection is the physical linking of a carrier's network with equipment or facilities not belonging to that network. The term may refer to a connection between a carrier's facilities and the equipment belonging to its customer, or to a connection between two (or more) carriers.

|

CSMA | Carrier-sense multiple access | Carrier-sense multiple access (CSMA) is a media access control (MAC) protocol in which a node verifies the absence of other traffic before transmitting on a shared transmission medium, such as an electrical bus or a band of the electromagnetic spectrum.

A transmitter attempts to determine whether another transmission is in progress before initiating a transmission using a carrier-sense mechanism. That is, it tries to detect the presence of a carrier signal from another node before attempting to transmit. If a carrier is sensed, the node waits for the transmission in progress to end before initiating its own transmission. Using CSMA, multiple nodes may, in turn, send and receive on the same medium. Transmissions by one node are generally received by all other nodes connected to the medium.

Variations on basic CSMA include addition of collision-avoidance, collision-detection and collision-resolution techniques. |
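The following deterministic, slot-based simulation is a toy sketch of basic 1-persistent CSMA, not a model of any particular standard: each node senses the shared medium before sending, defers while it is busy, and transmits as soon as it becomes idle. It also exposes plain CSMA's weakness: nodes that were all deferring sense the idle channel in the same slot and collide, which the collision-avoidance and collision-detection variants address. The function name, the one-frame-per-node setup, and the no-retransmission rule are simplifying assumptions.

```python
def simulate_csma(nodes, slots):
    """1-persistent CSMA on a shared medium, one frame per node.

    nodes: dict name -> (ready_slot, frame_length_in_slots)
    Returns a list of (slot, event, senders) tuples.
    """
    busy_until = 0
    pending = dict(nodes)
    log = []
    for t in range(slots):
        if t < busy_until:
            continue  # carrier sensed: every waiting node defers
        senders = sorted(n for n, (ready, _) in pending.items() if ready <= t)
        if not senders:
            continue
        # Channel idle: every ready node transmits at once.
        busy_until = t + max(pending[n][1] for n in senders)
        event = "sent" if len(senders) == 1 else "collision"
        log.append((t, event, senders))
        for n in senders:
            del pending[n]  # toy model: no retransmission after a collision
    return log
```

In the test scenario, B and C both become ready while A is transmitting, defer until slot 3, and then collide; D arrives later to an idle medium and succeeds.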

CSS |

| Cascading Style Sheets (CSS) is a style sheet language used for describing the presentation of a document written in a markup language like HTML.[1] CSS is a cornerstone technology of the World Wide Web, alongside HTML and JavaScript.

CSS is designed to enable the separation of presentation and content, including layout, colors, and fonts. This separation can improve content accessibility, provide more flexibility and control in the specification of presentation characteristics, enable multiple web pages to share formatting by specifying the relevant CSS in a separate .css file, and reduce complexity and repetition in the structural content.

Separation of formatting and content also makes it feasible to present the same markup page in different styles for different rendering methods, such as on-screen, in print, by voice (via speech-based browser or screen reader), and on Braille-based tactile devices. CSS also has rules for alternate formatting if the content is accessed on a mobile device.

The name cascading comes from the specified priority scheme to determine which style rule applies if more than one rule matches a particular element. This cascading priority scheme is predictable.

The CSS specifications are maintained by the World Wide Web Consortium (W3C). Internet media type (MIME type) text/css is registered for use with CSS by RFC 2318 (March 1998). The W3C operates a free CSS validation service for CSS documents.

In addition to HTML, other markup languages support the use of CSS, including XHTML, plain XML, SVG, and XUL. |
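One input to the cascade's priority scheme is selector specificity. As a rough sketch, the function below counts specificity as a triple (ID selectors; class, attribute, and pseudo-class selectors; type selectors and pseudo-elements) for simple selectors only. It ignores `:not()`, `:is()`, `:where()` and other complex cases, and it leaves out origin, importance, and source order, which the full cascade also uses. The regular expressions are assumptions written for this example, not taken from the CSS specification.

```python
import re


def specificity(selector):
    """Approximate (a, b, c) specificity for simple CSS selectors."""
    # Pseudo-elements (::before) count at type level; strip them first so
    # they are not also counted as pseudo-classes.
    pseudo_elements = re.findall(r"::[\w-]+", selector)
    rest = re.sub(r"::[\w-]+", " ", selector)
    ids = re.findall(r"#[\w-]+", rest)
    classes = re.findall(r"\.[\w-]+|\[[^\]]*\]|:[\w-]+", rest)
    # Type selectors: names not preceded by '#', '.', ':' or a name character,
    # searched after attribute selectors are removed.
    no_attrs = re.sub(r"\[[^\]]*\]", " ", rest)
    types = re.findall(r"(?<![#.:\w-])[a-zA-Z][\w-]*", no_attrs)
    return (len(ids), len(classes), len(types) + len(pseudo_elements))
```

Python compares tuples element by element, so `specificity(a) > specificity(b)` mirrors how specificity is compared: one ID outweighs any number of classes.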

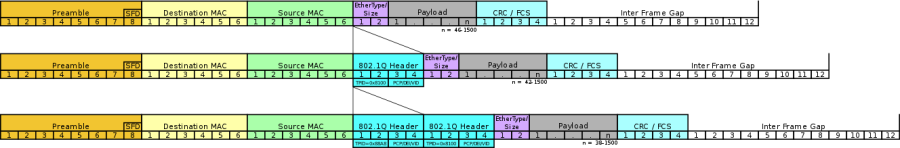

C-Tag | Subscriber VLAN Tag | The IEEE standard 802.1ad provides for double-tagging by service providers so that they can use VLANs allocated internally together with traffic already tagged as VLANs by service provider customers.

In this double tagging, the C-Tag (customer tag) is the inner tag set by the customer. The S-Tag (service tag) is the outer tag, placed immediately after the source MAC address.

|
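For illustration, the sketch below builds the start of an 802.1ad (QinQ) Ethernet header: destination and source MAC addresses, then the outer S-Tag (TPID 0x88A8), then the inner C-Tag (TPID 0x8100), then the payload EtherType. Each tag's 16-bit control field packs a 3-bit priority (PCP), a 1-bit drop-eligible indicator (DEI), and a 12-bit VLAN ID. The function names and example addresses are hypothetical.

```python
import struct


def vlan_tag(tpid, vid, pcp=0, dei=0):
    """Pack one 4-byte VLAN tag: TPID, then PCP(3) | DEI(1) | VID(12)."""
    if not 0 <= vid < 4096 or not 0 <= pcp < 8 or dei not in (0, 1):
        raise ValueError("tag field out of range")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", tpid, tci)


def qinq_header(dst_mac, src_mac, s_vid, c_vid, ethertype=0x0800):
    """Ethernet header with an outer S-Tag and an inner C-Tag (802.1ad)."""
    return (bytes.fromhex(dst_mac.replace(":", ""))
            + bytes.fromhex(src_mac.replace(":", ""))
            + vlan_tag(0x88A8, s_vid)   # S-Tag: added by the service provider
            + vlan_tag(0x8100, c_vid)   # C-Tag: set by the customer
            + struct.pack("!H", ethertype))
```

The result is 22 bytes: 12 for the two MAC addresses, 4 for each tag, and 2 for the EtherType (0x0800 denotes IPv4).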

Customer Development |

| Customer development is a four-step framework that provides a way to use a scientific approach to validate assumptions about your product and business. |

CVSD | Continuously variable slope delta modulation | Continuously variable slope delta modulation (CVSD or CVSDM) is a voice coding method. It is a delta modulation with variable step size (i.e., a special case of adaptive delta modulation), first proposed by Greefkes and Riemens in 1970.

CVSD encodes at 1 bit per sample, so that audio sampled at 16 kHz is encoded at 16 kbit/s.

The encoder maintains a reference sample and a step size. Each input sample is compared to the reference sample. If the input sample is larger, the encoder emits a 1 bit and adds the step size to the reference sample. If the input sample is smaller, the encoder emits a 0 bit and subtracts the step size from the reference sample. The encoder also keeps the previous N bits of output (N = 3 or N = 4 are very common) to determine adjustments to the step size; if the previous N bits are all 1s or 0s, the step size is increased. Otherwise, the step size is decreased (usually in an exponential manner, with a time constant τ in the range of 5 ms). The step size is adjusted for every input sample processed.

To allow for bit errors to fade out and to allow (re)synchronization to an ongoing bitstream, the output register (which keeps the reference sample) is normally realized as a leaky integrator with a time constant (τ) of about 1 ms.

The decoder reverses this process, starting with the reference sample and adding or subtracting the step size according to the bit stream. The sequence of adjusted reference samples is the reconstructed waveform, and the step size is adjusted according to the same all-1s-or-0s logic as in the encoder.

Adapting the step size avoids slope overload (the quantization step increases when the signal changes rapidly) and reduces granular noise when the signal is constant (the quantization step decreases).

CVSD is sometimes called a compromise between simplicity, low bitrate, and quality. Common bitrates are 9.6–128 kbit/s.

Like other delta-modulation techniques, the output of the decoder does not exactly match the original input to the encoder. |
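The CVSD entry's description can be turned into a minimal encoder/decoder sketch: 1 bit per sample, a run detector over the last N bits (N = 3 by default), a step size that grows when the last N bits agree and decays otherwise, and a leaky integrator for the reference sample. The constants (`step_min`, `step_max`, the growth and decay factors, and the `leak` factor) are illustrative assumptions rather than values from any standard; a real codec would derive them from its sample rate and the time constants quoted in the entry. The class and function names are hypothetical.

```python
from collections import deque


class CVSDState:
    """State shared by encoder and decoder; both must update it identically."""

    def __init__(self, n=3, step_min=10.0, step_max=1280.0,
                 grow=1.5, decay=0.9, leak=0.98):
        self.ref = 0.0                 # reference sample (integrator output)
        self.step = step_min           # current step size
        self.history = deque(maxlen=n) # last n output bits
        self.n, self.step_min, self.step_max = n, step_min, step_max
        self.grow, self.decay, self.leak = grow, decay, leak

    def update(self, bit):
        """Adapt the step size from recent bits, then move the reference."""
        self.history.append(bit)
        if len(self.history) == self.n and len(set(self.history)) == 1:
            self.step = min(self.step * self.grow, self.step_max)   # slope overload
        else:
            self.step = max(self.step * self.decay, self.step_min)  # granular region
        delta = self.step if bit else -self.step
        self.ref = self.leak * self.ref + delta  # leaky integrator
        return self.ref


def cvsd_encode(samples, **params):
    """Emit 1 when the input is above the reference, else 0."""
    state, bits = CVSDState(**params), []
    for s in samples:
        bit = 1 if s > state.ref else 0
        bits.append(bit)
        state.update(bit)
    return bits


def cvsd_decode(bits, **params):
    """Rebuild the waveform by replaying the same state updates."""
    state = CVSDState(**params)
    return [state.update(b) for b in bits]
```

Because encoder and decoder run identical state updates, the decoder reproduces exactly the reference trace the encoder compared against; the leak factor pulls that trace back toward zero so a corrupted bit's effect fades out over time.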